The European Union’s AI Act sets a global precedent for artificial intelligence (AI) regulation. The document imposes detailed obligations for all entities developing or deploying AI that affects persons in the EU, with major fines for noncompliance. To meet the requirements in time, companies must quickly establish organization-wide AI governance and capabilities. Putting in the work now to understand different AI categories, regulatory timelines, and intricacies of the AI Act will prepare organizations to innovate with purpose in an AI-driven world.

What companies need to know about the EU AI Act

The EU AI Act is a pioneering piece of legislation that sets out the rules for the use of artificial intelligence systems in the EU, where the definition of AI extends to include all computational modeling. The legislation complements existing EU law on nondiscrimination with specific requirements aimed at minimizing the risk of algorithmic bias, defined by the EU as any systematic error in the outcome of algorithmic operations that places privileged groups at an advantage over unprivileged groups. The AI Act imposes obligations throughout the AI system lifecycle, from the datasets used to develop AI to risk management practices and human oversight requirements.

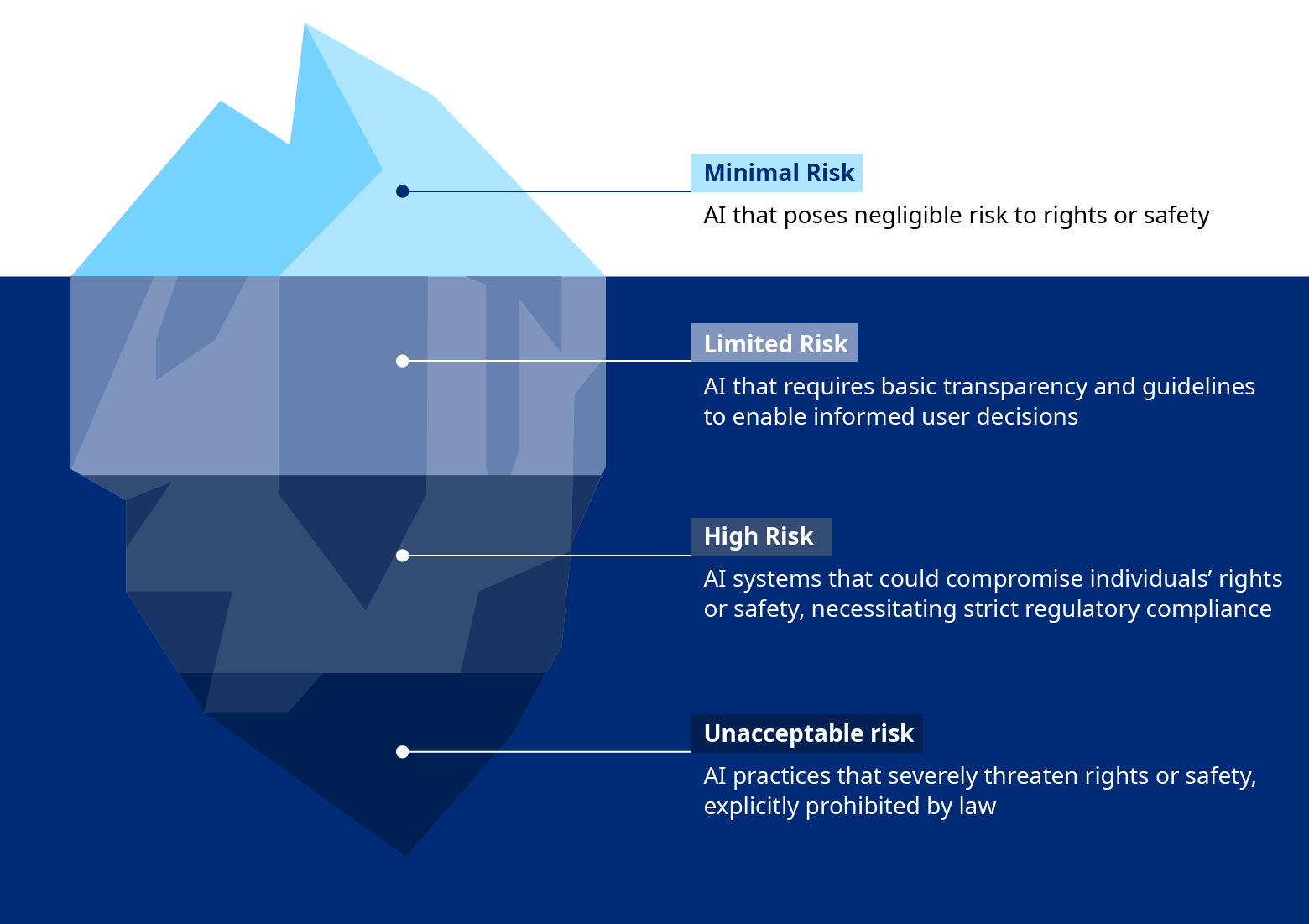

The act sets out AI governance requirements based on four risk severity categories, with an additional designation for general-purpose AI (GPAI) that poses systemic risk:

RISK SEVERITY AND GOVERNANCE REQUIREMENTS

Minimal risk: AI applications that pose negligible risk to rights or safety, and promote user engagement and innovation. Examples include spam filters and AI-enabled video games. The act encourages a code of conduct for these AI use cases.

Limited risk: AI systems requiring transparency so users can make informed decisions and are aware that they are interacting with an AI. Examples include chatbots and image generators. Governance centers on transparent labeling and a code of conduct for the deployment of AI in interactions with people, to ensure end-user awareness and safety.

High risk: AI systems with significant potential to affect individuals' rights or safety, necessitating strict compliance with regulatory standards. Examples include credit decisioning, employment, border control, and biometrics. These systems require defined governance architecture, including but not limited to risk management systems, data governance, documentation, record keeping, testing, and human oversight.

Unacceptable risk: AI practices that threaten fundamental rights or pose severe safety risks. Examples include some types of biometric categorization, social scoring, and predictive policing. These practices are explicitly prohibited by law.

GPAI systemic risk: AI systems designed for broad use across various functions such as image and speech recognition, content and response generation, and others. GPAI models are considered to pose systemic risk when the cumulative amount of compute used for training exceeds 10^25 FLOPs (floating-point operations, a count of the total computation performed rather than a per-second rate). For these models, it is mandatory to conduct model evaluations and adversarial testing, track and report serious incidents, and ensure cybersecurity protections.
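
To make the compute threshold concrete, the following Python sketch checks an estimated training budget against 10^25 floating-point operations. The "6 x parameters x tokens" estimate is a common rule of thumb for dense transformer training and is an assumption here, not something defined in the AI Act. The function names and example model sizes are hypothetical.

```python
# Threshold named in the text: cumulative training compute above 10^25
# floating-point operations (a total count, not a per-second rate).
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate of total training compute.

    ASSUMPTION: uses the widely cited ~6 * N * D heuristic for dense
    transformers. The AI Act does not prescribe this formula.
    """
    return 6.0 * n_parameters * n_training_tokens


def exceeds_systemic_risk_threshold(training_flops: float) -> bool:
    """True when cumulative training compute exceeds the threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models, for illustration only.
small_model_flops = estimate_training_flops(7e9, 2e12)    # about 8.4e22
large_model_flops = estimate_training_flops(1e12, 1e13)   # about 6e25
```

Under these assumed sizes, `exceeds_systemic_risk_threshold(small_model_flops)` is false and `exceeds_systemic_risk_threshold(large_model_flops)` is true. A real assessment would rely on measured training compute rather than an estimate.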

Why the EU AI Act applies to you

Although developers bear the primary responsibilities for AI systems, users (also called deployers) of AI systems have their own set of obligations. Additional obligations apply specifically to developers of GPAI models.

These obligations on developers and deployers will be enforced through unprecedented penalties. The EU AI Act imposes fines for noncompliance based on a percentage of worldwide annual turnover, underscoring how much the EU’s stance on AI safety matters for global companies:

- For prohibited AI systems — fines can reach 7% of worldwide annual turnover or €35 million, whichever is higher.

- For high-risk AI and GPAI transparency obligations — fines can reach 3% of worldwide annual turnover or €15 million, whichever is higher.

- For providing incorrect information to a notified body or national authority — fines can reach 1% of worldwide annual turnover or €7.5 million, whichever is higher.
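
The "whichever is higher" rule in the tiers above comes down to simple arithmetic. Here is a minimal sketch, assuming turnover is expressed in whole euros. The tier keys and function name are illustrative, and the result is a ceiling ("fines can reach"), not a prediction of the fine an authority would actually impose.

```python
# (percent of worldwide annual turnover, fixed amount in EUR) per tier,
# taken from the list above. Tier keys are hypothetical labels.
FINE_TIERS = {
    "prohibited_ai": (7, 35_000_000),
    "high_risk_or_gpai_transparency": (3, 15_000_000),
    "incorrect_information": (1, 7_500_000),
}


def max_fine_eur(tier: str, worldwide_annual_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the percentage-based
    amount and the fixed amount for the given tier."""
    percent, fixed_amount = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding on large sums.
    turnover_based = worldwide_annual_turnover_eur * percent // 100
    return max(turnover_based, fixed_amount)
```

For a company with €2 billion in worldwide annual turnover, `max_fine_eur("prohibited_ai", 2_000_000_000)` gives 140,000,000, because 7% of turnover exceeds the €35 million floor. For a company with €100 million in turnover, the same call returns 35,000,000, because the fixed amount is higher.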

The EU AI Act’s enforcement timeline

The EU AI Act was approved in the European Parliament on March 13, 2024, with a legal and linguistic corrigendum issued on April 16, 2024, and debated on April 18, 2024. The document must pass through a few more stages (a second adoption vote and final approval by the Council of the European Union) before being published in the EU Official Journal and entering into force 20 days later. Publication is expected by the end of May 2024.

Once the AI Act is officially published and enters into force, several compliance deadlines begin to run, ranging from only six months for prohibited AI systems to 24 or 36 months for different classes of high-risk systems.
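
As a rough illustration of how these deadlines stack up, the sketch below derives approximate dates from a publication date. It assumes entry into force 20 days after publication and deadlines counted in calendar months from entry into force, using the six-month offset for prohibited systems and the 24- and 36-month offsets for high-risk systems mentioned in this article. The publication date is a placeholder, not the real one, and the exact legal application dates should be taken from the published text.

```python
import calendar
from datetime import date, timedelta


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def compliance_deadlines(publication: date) -> dict:
    """Approximate deadlines, assuming they run from entry into force."""
    entry_into_force = publication + timedelta(days=20)
    # Offsets in months; labels are illustrative, not legal terms.
    offsets = {
        "prohibited_systems": 6,
        "high_risk_24_month_class": 24,
        "high_risk_36_month_class": 36,
    }
    deadlines = {"entry_into_force": entry_into_force}
    for label, months in offsets.items():
        deadlines[label] = add_months(entry_into_force, months)
    return deadlines


# PLACEHOLDER publication date, for illustration only.
example = compliance_deadlines(date(2024, 6, 1))
```

With the placeholder date of June 1, 2024, entry into force falls on June 21, 2024, and the approximate deadlines are December 21, 2024 (prohibited systems), June 21, 2026, and June 21, 2027 (the two high-risk classes).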

AI Act extensions and exemptions aim for balance

The AI Act includes several extensions for systems already on the market before their category’s date of enforcement (see timeline above). These extensions include an additional 24 months for all existing GPAI systems to comply, and an additional 48 months for existing high-risk large-scale IT systems and systems used by public authorities.

Taking things further, all other existing high-risk systems are exempt from AI Act obligations until they undergo a "significant change." This would mean that high-risk systems updated just before the 24- or 36-month enforcement date are free from high-risk compliance until a significant change is made. This exemption could incentivize companies to act fast to launch or update important AI systems. It also raises pressing questions about what constitutes a significant change, questions that will be debated over the coming months.

Another exemption, clarified in the April 2024 text, is given to AI systems released under free and open-source licenses, unless they are classified as high-risk or prohibited systems or trigger a need for heightened transparency.

In establishing these provisions, the EU is easing the regulatory burden by providing a helpful transition period for existing AI systems and allowing minor modifications without triggering an immediate need for high-risk compliance. This flexible approach acknowledges the complexity and potential cost of applying demanding standards to umbrella categories of AI systems, especially systems that are deeply integrated into current operations or infrastructure. However, the ambiguity of potentially indefinite exemptions necessitates clear guidance from regulators to prevent exploitation of loopholes.

The implementation of these exemptions will require careful monitoring and clarification to ensure that the objectives of the AI Act — ensuring ethical use and public safety — are not undermined. As the AI landscape continues to evolve, industry and policymakers will need to work together to address potential loopholes and other emerging challenges.